Favicon Fun

by Ben Ubois


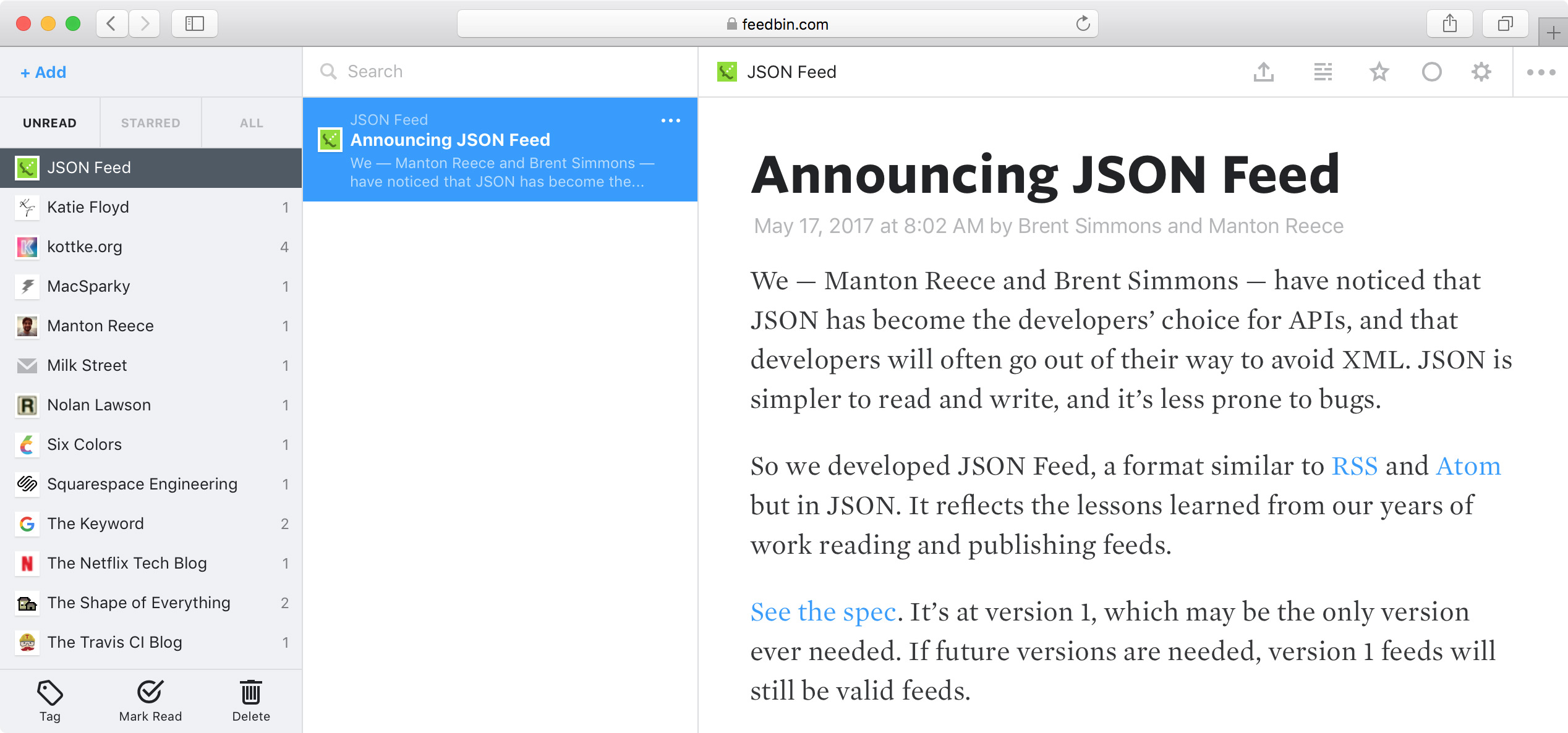

Favicons are an important and often overlooked part of publishing a website. They help distinguish open tabs in web browsers and make the source of an article instantly recognizable in Feedbin.

Unfortunately, not every website publisher takes the time to create a favicon for their site. In this case, Feedbin uses a generic icon as a placeholder. The problem with this approach, I feel, is that it de-emphasizes feeds without a custom favicon and provides no way to tell their sources apart.

To improve this situation, Feedbin now generates a unique icon color for every site that uses the default favicon. This gives equal emphasis to all sites, whether they have a favicon or not.

Colors are generated using Color Hash, with the hostname as the seed. This way the color will always be the same for the same domain.

For example:

    var colorHash = new ColorHash()

    colorHash.hex("feedbin.com")

Produces the color #538EAC.
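To illustrate why seeding with the hostname gives a stable color, here is a minimal sketch of the general idea. It is not Color Hash's actual algorithm and will not reproduce the color above: the function names (`hashString`, `hslToHex`, `colorForHostname`, `colorForFeedUrl`), the FNV-1a-style hash, and the fixed saturation and lightness are all assumptions made for the example.

```javascript
// Hypothetical sketch: NOT the Color Hash library's real implementation.

// FNV-1a-style 32-bit hash over the string's UTF-16 code units.
function hashString(str) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // keep it an unsigned 32-bit value
  }
  return hash;
}

// Convert HSL (h in [0, 360), s and l in [0, 1]) to an uppercase hex color.
function hslToHex(h, s, l) {
  const a = s * Math.min(l, 1 - l);
  const channel = (n) => {
    const k = (n + h / 30) % 12;
    const value = l - a * Math.max(-1, Math.min(k - 3, 9 - k, 1));
    return Math.round(value * 255).toString(16).padStart(2, "0");
  };
  return ("#" + channel(0) + channel(8) + channel(4)).toUpperCase();
}

// The hostname is the seed: the same hostname always maps to the same hue.
// Saturation and lightness are fixed at assumed values so icons stay legible.
function colorForHostname(hostname) {
  const hue = hashString(hostname.toLowerCase()) % 360;
  return hslToHex(hue, 0.5, 0.5);
}

// Every feed on a domain shares one color, because only the hostname is hashed.
function colorForFeedUrl(feedUrl) {
  return colorForHostname(new URL(feedUrl).hostname);
}
```

Because the color is computed from the hostname alone, nothing needs to be stored: calling `colorForFeedUrl("https://feedbin.com/blog/atom.xml")` returns the same value as `colorForHostname("feedbin.com")` every time.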

If you have a website, I’d recommend adding a favicon. They’re easy to implement. Even if you already have one, it’s possible it could be improved. With the advent of retina screens, it’s important to double the size from the traditional 16×16 to 32×32 pixels so the icon does not look blurry.